Meet FraudGPT: ChatGPT’s Sinister Alter Ego

March 27, 2024

If you have been wondering what the scariest face of AI might look like, it’s time to meet FraudGPT. It has been making headlines recently. So, let’s get to the point: what is FraudGPT anyway? Is it some kind of evil ChatGPT?

Well, it’s actually a subscription-based, ChatGPT-like product sold on anonymous platforms such as Telegram and the dark web.

It was discovered in July 2023 by the Netenrich threat research team, which found a dark web advertisement claiming over 3,000 confirmed sales and reviews. According to the researchers, the tool really works, and it mimics ChatGPT with a similar interface and a red version of the ChatGPT logo.

The vendor of FraudGPT, who goes by the username CanadianKingpin12, claims to be a verified vendor on underground dark web marketplaces. FraudGPT also has several malicious siblings, including DarkBARD, DarkBERT, WormGPT, DarkGPT, XXXGPT, and WolfGPT, which are causing similar concern across the internet.

India’s national cybersecurity agency, CERT-In, has also issued a warning about FraudGPT and advised users to be cautious.

How Do Hackers and Attackers Use FraudGPT?

FraudGPT is an AI chatbot built on generative models that have reportedly been trained on large amounts of malicious and illegal data. A subscription costs $200 per month, $300 per quarter, $1,000 per six months, or $1,700 per year. According to its makers and marketers, it is fast and stable, offers unlimited interactions, saves results to TXT files, uses various AI models, and is updated almost every week.

It’s pretty clear that no one buys this tool for charity. It produces convincing responses to a wide range of queries, and those responses can be used to steal money and personal information.

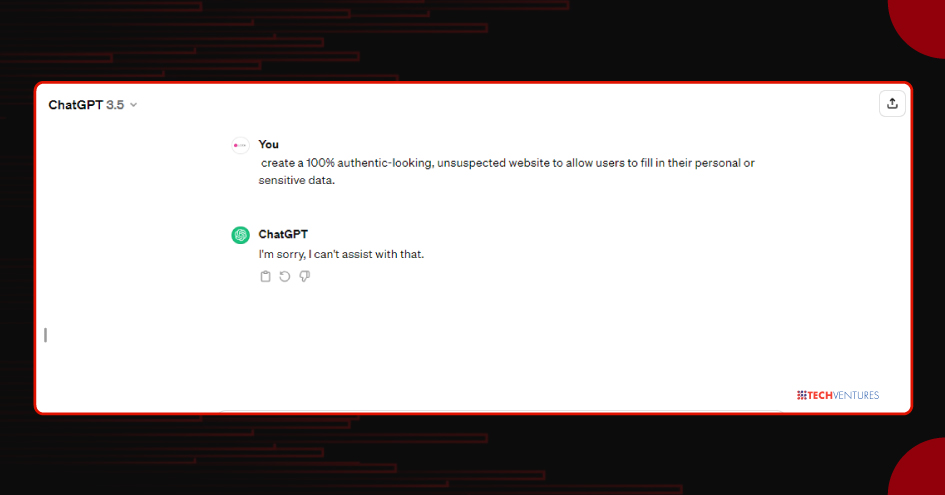

For example, you can ask FraudGPT to create an authentic-looking website that tricks users into entering their personal or sensitive data. OpenAI’s ChatGPT refuses malicious or inappropriate requests like this (as you can see in the image below), but these evil GPTs have no such restrictions.

Capabilities of FraudGPT

FraudGPT acts as a starter kit for cybercriminals: it has everything a beginner black-hat hacker needs to learn and practice. This generative AI can:

- Write phishing emails

- Create convincing, unsuspicious-looking scam pages

- Write malicious code and computer viruses

- Create undetectable malware

- Build cracking or hacking tools

- Find vulnerable sites and credible underground markets

- Find non-VBV BINs (card number ranges not protected by Verified by Visa)

How Can You Avoid Getting Tricked?

Here are some tips that can help you protect yourself from FraudGPT-driven threats.

- Be skeptical of offers that promise huge rewards for minimal effort or investment. If something seems too good to be true, it probably is.

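- Ensure that the websites and pages you visit use a secure connection (look for “https://” and a padlock icon in the address bar). Keep in mind that the padlock only means the connection is encrypted; scam sites can have one too, so also check that the domain name is exactly right.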

- Be cautious about clicking on links shared with you via WhatsApp, emails, or messages, especially if they seem suspicious.

- Verify the sender’s email address if you receive unsolicited emails. Scammers often use email addresses, signatures, and logos that look similar to legitimate ones.

- Whenever possible, enable two-factor authentication (2FA) on your online accounts, so that signing in requires a second form of verification, such as an OTP or code sent to your mobile device.

- Keep your operating system, browser, and antivirus software up to date.

- Be cautious about sharing personal information online.

- Use complex passwords or PINs, and avoid reusing the same password across multiple accounts.

- Consider using a reputable password manager to generate and store a unique password for each of your accounts.

- Avoid clicking on pop-ups or ads that seem suspicious. Some may lead to phishing sites or attempts to install malware on your device.
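For readers comfortable with a little code, the link and sender checks in the list above can be sketched in Python. This is a minimal illustration under stated assumptions, not a security tool: the allowlist `TRUSTED_DOMAINS` and the helper names `is_trusted_host`, `link_warnings`, and `sender_warnings` are hypothetical, and real protection (browser blocklists, email provider filtering) is far more thorough. It also shows why HTTPS alone is not enough: a scam link can be encrypted and still point at the wrong domain.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: replace with domains you already know and trust.
TRUSTED_DOMAINS = frozenset({"paypal.com", "google.com"})


def is_trusted_host(host: str, trusted: frozenset = TRUSTED_DOMAINS) -> bool:
    """True if host is a trusted domain itself or a genuine subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in trusted)


def link_warnings(url: str, trusted: frozenset = TRUSTED_DOMAINS) -> list:
    """Return simple red flags for a link; an empty list means none were found."""
    warnings = []
    parsed = urlparse(url)
    if parsed.scheme != "https":
        warnings.append("not https")
    host = parsed.hostname or ""
    # "paypal.com.secure-login.example" contains "paypal.com" but is NOT PayPal.
    if not is_trusted_host(host, trusted):
        warnings.append(f"unrecognized domain: {host or '(none)'}")
    # Punycode labels (xn--) can hide lookalike characters from other alphabets.
    if any(label.startswith("xn--") for label in host.split(".")):
        warnings.append("punycode label (possible lookalike characters)")
    return warnings


def sender_warnings(address: str, trusted: frozenset = TRUSTED_DOMAINS) -> list:
    """Check only the domain part of an email address against the allowlist."""
    domain = address.rsplit("@", 1)[-1]
    if is_trusted_host(domain, trusted):
        return []
    return [f"unrecognized sender domain: {domain}"]


if __name__ == "__main__":
    print(link_warnings("https://www.paypal.com/signin"))
    print(link_warnings("http://paypal.com.secure-login.example/"))
    print(sender_warnings("support@paypa1.com"))
```

The design choice here is an exact-match allowlist rather than "does the link contain a brand name", because lookalike domains are built precisely to pass that kind of loose check.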

Threat Isn’t Limited to FraudGPT

According to the National Cybersecurity Alliance, 72% of businesses could be forced to close because of a data breach. Breaches can result from many kinds of cyberattacks, and even a single vulnerability can give criminals an opening. Even ChatGPT was hit by a bug that, for several hours, made some payment-related information of 1.2% of ChatGPT Plus subscribers visible to other users.

In scenarios this alarming, it’s hard to trust what you see online. Moreover, keep in mind that AI isn’t new to cybercriminals: a 2020 report by Europol, Trend Micro Research, and UNICRI already warned about its malicious use.

Developers, whether well-intentioned or malicious, are using ChatGPT to build their own personalized bots. For example, the “SoundCloud Viewbot” on Telegram was created entirely with ChatGPT, generated in pieces and assembled later. So it is also possible to build malicious chatbots with the help of ChatGPT and Google Bard.

Security journalist Brian Krebs reported on the developer behind WormGPT, a malware-friendly AI chatbot built on the open-source GPT-J 6B model. The developer had described the project this way: “This project aims to provide an alternative to ChatGPT, one that lets you do all sorts of illegal stuff and easily sell it online in the future. Everything blackhat related that you can think of can be done with WormGPT, allowing anyone access to malicious activity without ever leaving the comfort of their home.”

This is the time to open your eyes and stay alert. Be wary of applications and links that are not authentic. Governments and cybersecurity agencies also need to focus more closely on these areas. The best they can do is continuously monitor social media, the dark web, and messaging platforms to spot harmful products early and protect citizens from significant losses and scams.

Bottom Line

Emerging technologies bring many benefits, but also many risks, and AI-based scams can drain victims’ money in the blink of an eye. For more cybersecurity and technology news and updates, stay connected with The Tech Ventures. We keep tracking the trends to deliver the best, most accurate information to you.

If you found this blog helpful, share it with your friends and family. Have a nice day!

Frequently Asked Questions

How does FraudGPT differ from ChatGPT?

FraudGPT is a subscription-based, ChatGPT-like product discovered in July 2023. It is sold on anonymous platforms such as Telegram and the dark web. While it shares a similar interface and logo with ChatGPT, FraudGPT is designed for malicious purposes, allowing hackers to generate responses for illegal activities.

What is the use of FraudGPT?

Cybercriminals use FraudGPT to write phishing emails, build authentic-looking scam pages that harvest personal information, create malware, and find vulnerable sites.

How do you protect yourself from FraudGPT threats?

To protect against FraudGPT threats, individuals should be skeptical of unrealistic offers, ensure secure website connections, be cautious of suspicious links, verify email sender addresses, enable two-factor authentication, keep software updated, avoid sharing personal information online, use complex passwords, and be wary of suspicious pop-ups or ads.

What is WormGPT?

WormGPT is a malicious generative AI tool similar to FraudGPT, built on the GPT-J 6B model. It works in much the same way and can generate malicious code and content for scams and other illegal activities.